A recent customer of mine had deployed a machine learning model developed using Databricks. They had correctly used MLflow to track the experiments and deployed the final model using Serverless ML Model Serving. This was deemed extremely useful, as the serverless capability allows the compute resources to scale to zero when not in use, shaving off precious costs.

Once deployed, inference (scoring) can be done via an HTTP POST request that requires Bearer authentication and a payload in a specific JSON format.

    curl -X POST -u token:$DATABRICKS_PAT_TOKEN $MODEL_VERSION_URI \
      -H 'Content-Type: application/json' \
      -d '{"dataframe_records": [
        {
          "sepal_length": 5.1,
          "sepal_width": 3.5,
          "petal_length": 1.4,
          "petal_width": 0.2
        }
      ]}'

This Bearer token can be generated using the logged-in user dropdown -> User Settings -> Generate New Token as shown below:

In Databricks parlance, this is the Personal Access Token (PAT), and it is used to authenticate any API calls made to Databricks. Also note that the lifetime of the token defaults to 90 days and is easily configured.
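For callers that prefer Python over curl, the scoring request can be sketched roughly as follows. This is a minimal sketch using only the standard library, not the only way to call the endpoint. The function names (`build_scoring_payload`, `build_scoring_request`, `score`) are hypothetical, and it assumes the PAT is sent in an `Authorization: Bearer` header rather than via `-u token:...`.

```python
import json
import urllib.request


def build_scoring_payload(records):
    """Wrap a list of feature dicts in the dataframe_records format."""
    return {"dataframe_records": list(records)}


def build_scoring_request(model_uri, token, records):
    """Build (but do not send) the POST request for the serving endpoint."""
    body = json.dumps(build_scoring_payload(records)).encode("utf-8")
    return urllib.request.Request(
        model_uri,
        data=body,
        method="POST",
        headers={
            # Assumption: the PAT is passed as a Bearer token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def score(model_uri, token, records):
    """Send the request and return the parsed JSON response."""
    request = build_scoring_request(model_uri, token, records)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `score(model_uri, pat, [{"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4, "petal_width": 0.2}])` would send the same record as the curl example, with `model_uri` and `pat` standing in for `$MODEL_VERSION_URI` and `$DATABRICKS_PAT_TOKEN`.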

The customer had the Data Scientist generate the PAT and use it for calling the endpoint.

However, this is a security anti-pattern. Since the user is a Data Scientist on the Databricks workspace, the PAT carries that user’s access to the workspace and could allow a nefarious actor to achieve higher privileges. In addition, if the user’s account is decommissioned, the user loses access to all Databricks objects, and anything relying on that user’s PAT stops working. This user-based PAT was flagged by the security team, and the customer was asked to replace it with a service account, as is standard practice in any on-premises system.

Since this is an Azure deployment, the customer’s first action was to create a new user account in Azure Active Directory, add the user to the Databricks workspace and generate the PAT for this “service account”. However, this is still a security hazard, given that the user has interactive access to the Databricks workspace.

I suggested the use of an Azure service principal, which would secure the API access with the lowest privileges. The service principal acts as a client role and uses the OAuth 2.0 client credentials flow to authorize access to Azure Databricks resources. Also, the service principal is not tied to a specific user in the workspace.

In this post, I detail the steps required to do this (official documentation here). We will be using two separate portals and the Azure CLI to complete these steps:

- Azure Portal

- Databricks Workspace

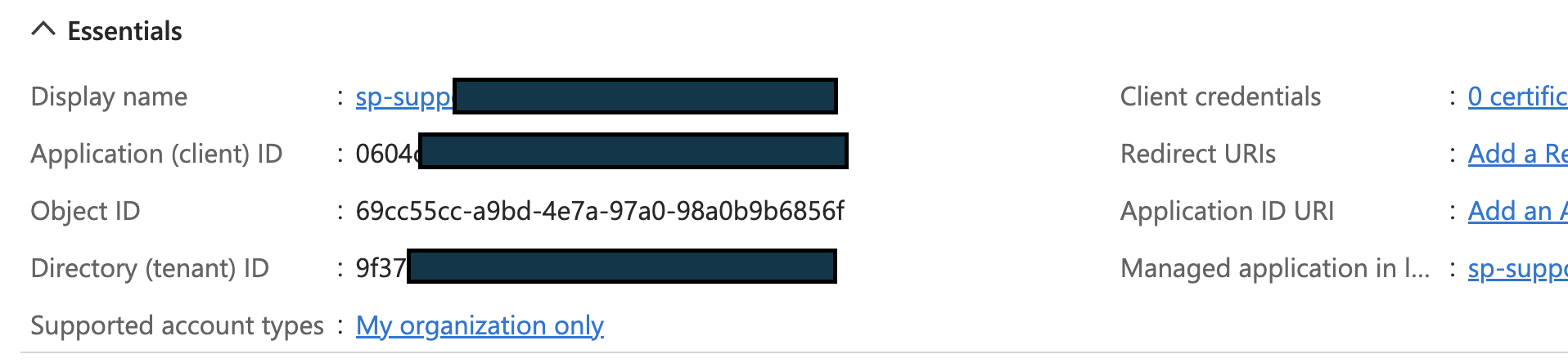

- Generate an Azure Service Principal: This step is done entirely in the Azure Portal (not the Databricks workspace). Go to Azure Active Directory -> App Registrations -> Enter some Display Name -> Select “Accounts in this organisation only” -> Register. After the service principal is generated, you will need its Application (client) ID and Tenant ID as shown below:

- Generate Client Secret and Grant access to Databricks API: We will need to create a client secret and register Databricks API access.

To generate the client secret, remain on the service principal’s blade -> Certificates & Secrets -> New Client secret -> Enter Description and Expiry -> Add. Copy and store the Client Secret’s Value (not ID) separately. Note: The client secret Value is only displayed once.

Further, go to API permissions -> Add a permission -> APIs my organization uses tab -> AzureDatabricks -> Permissions.

- Add SP to the workspace: Databricks can sync all identities to the workspace if “Identity Federation” is enabled. Unfortunately, this was not enabled for this customer, so they needed to add the SP to the workspace and assign its permissions manually.

This step is done in your Databricks workspace. Go to Your Username -> Admin Settings -> Service Principals tab -> Add service principal -> Enter the Application ID from Step 1 and a name -> No other permissions required -> Add.

- Get Service Principal Contributor Access: Once the service principal has been generated, you will need to get Contributor access to the subscription. You will need the help of your Azure Admin for this.

- Authenticate as service principal: First, log in to the Azure CLI as the service principal with the command:

    az login \
      --service-principal \
      --tenant <Tenant-ID> \
      --username <Client-ID> \
      --password <Client-secret> \
      --output table

Use the values from Step 1 (Tenant ID and Client ID) and Step 2 (Client Secret) here.

- Generate Azure AD tokens for service principals: To generate an Azure AD token, use the following command:

    az account get-access-token \
      --resource 2ff814a6-3304-4ab8-85cb-cd0e6f879c1d \
      --query "accessToken" \
      --output tsv

The resource ID must be exactly as shown above, since it is the fixed ID that represents Azure Databricks.

This will create a Bearer token that you should preserve for the remaining steps.
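If the Azure CLI is not available where the token is needed, the same OAuth 2.0 client credentials flow can be sketched directly against the Azure AD token endpoint. This is a hedged sketch rather than the method used for this customer: the function names are hypothetical, and it assumes the v2.0 token endpoint with the Databricks resource ID plus `/.default` as the scope.

```python
import json
import urllib.parse
import urllib.request

# Fixed resource ID for Azure Databricks, the same value passed to the az command.
DATABRICKS_RESOURCE_ID = "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d"


def build_token_request(tenant_id, client_id, client_secret):
    """Build (but do not send) the client-credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,          # Application (client) ID from Step 1
        "client_secret": client_secret,  # Secret Value from Step 2
        "scope": f"{DATABRICKS_RESOURCE_ID}/.default",
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=form,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def fetch_access_token(tenant_id, client_id, client_secret):
    """Send the request and return the Azure AD access token string."""
    request = build_token_request(tenant_id, client_id, client_secret)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["access_token"]
```

The value returned by `fetch_access_token` plays the same role as the output of `az account get-access-token`.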

- Assign permissions to SP to access Model Serving: The Model Serving endpoint has its own access control that provides the following permissions: Can View, Can Query, and Can Manage. Since we need to query the endpoint, we need to grant the “Can Query” permission to the SP. This is done using the Databricks API endpoints as follows:

    $ curl -n -v -X GET -H "Authorization: Bearer $ACCESS_TOKEN" https://$WORKSPACE_URL/api/2.0/serving-endpoints/$ENDPOINT_NAME
    # $ENDPOINT_ID = the uuid field from the returned object

Replace $ACCESS_TOKEN with the bearer token generated in Step 6. $WORKSPACE_URL should be replaced by the Databricks workspace URL. Finally, $ENDPOINT_NAME should be replaced by the name of the endpoint created.

This will give you the ID of the Endpoint as shown:

Next, you will need to issue the following command:

    $ curl -n -v -X PATCH -H "Authorization: Bearer $ACCESS_TOKEN" -d '{"access_control_list": [{"user_name": "$SP_NAME", "permission_level": "CAN_QUERY"}]}' https://$WORKSPACE_URL/api/2.0/permissions/serving-endpoints/$ENDPOINT_ID

$ACCESS_TOKEN: From Step 6

$SP_NAME: Name of the service principal as created in Azure Portal

$WORKSPACE_URL: Workspace URL

$ENDPOINT_ID: Generated in the command above
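The permission grant can also be sketched in Python, which avoids substituting values by hand inside a single-quoted JSON string. This is only an illustration under assumptions: the function names are hypothetical, the endpoint ID is assumed to have been read already from the GET response, and the `key` parameter exists because, as far as I know, the Databricks Permissions API also accepts a `service_principal_name` entry (taking the application ID) alongside `user_name`.

```python
import json
import urllib.request


def build_permission_body(principal, level="CAN_QUERY", key="user_name"):
    """Build the access_control_list body used in the PATCH call."""
    return {"access_control_list": [{key: principal, "permission_level": level}]}


def build_grant_request(workspace_url, endpoint_id, access_token, principal,
                        key="user_name"):
    """Build (but do not send) the PATCH request granting Can Query."""
    url = (f"https://{workspace_url}/api/2.0/permissions/"
           f"serving-endpoints/{endpoint_id}")
    body = json.dumps(build_permission_body(principal, key=key)).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {access_token}",  # Azure AD token from Step 6
            "Content-Type": "application/json",
        },
    )


def grant_can_query(workspace_url, endpoint_id, access_token, principal,
                    key="user_name"):
    """Send the PATCH request and return the parsed JSON response."""
    request = build_grant_request(workspace_url, endpoint_id, access_token,
                                  principal, key=key)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With the defaults, the request body matches the curl command; passing `key="service_principal_name"` is the variation to try if the workspace rejects the `user_name` form.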

- Generate Databricks PAT from Azure AD Token: While the Azure AD token is now available and can be used for calling the Model Serving endpoint, Azure AD tokens are short-lived, so the final step is to use it to create a Databricks PAT for the service principal.

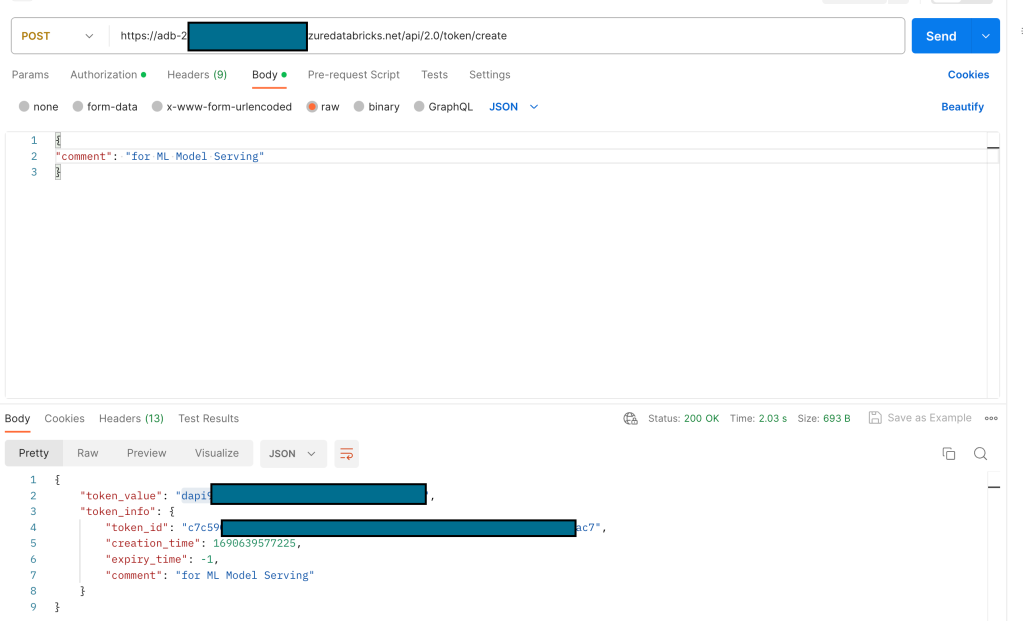

To create a Databricks PAT, you will need to make a POST request to the following endpoint (I am using Postman for the request): https://$WORKSPACE_URL/api/2.0/token/create

The Azure AD Bearer token should be passed in the Authorization header. In the body of the request, pass a JSON object with a “comment” attribute.

The response of the POST request will contain an attribute, “token_value”, that can be used as the Databricks PAT.

The token_value, starting with “dapi”, is the PAT that can be used for calling the Model Serving endpoint, which is now in a secure configuration using the service principal.
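For readers who would rather script this than use Postman, the token creation call can be sketched as below. It is a minimal sketch with hypothetical function names; the optional `lifetime_seconds` field is part of the Databricks Token API, but the choice of whether and how long to set it is an assumption, not something this customer configured.

```python
import json
import urllib.request


def build_pat_request(workspace_url, aad_token, comment, lifetime_seconds=None):
    """Build (but do not send) the POST request to /api/2.0/token/create."""
    body = {"comment": comment}
    if lifetime_seconds is not None:
        # Optional: limits how long the generated PAT stays valid.
        body["lifetime_seconds"] = lifetime_seconds
    return urllib.request.Request(
        f"https://{workspace_url}/api/2.0/token/create",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {aad_token}",  # Azure AD token from Step 6
            "Content-Type": "application/json",
        },
    )


def create_pat(workspace_url, aad_token, comment, lifetime_seconds=None):
    """Send the request and return the token_value (the PAT, starting with dapi)."""
    request = build_pat_request(workspace_url, aad_token, comment, lifetime_seconds)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["token_value"]
```

The string returned by `create_pat` is the value to store securely and use when calling the Model Serving endpoint.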